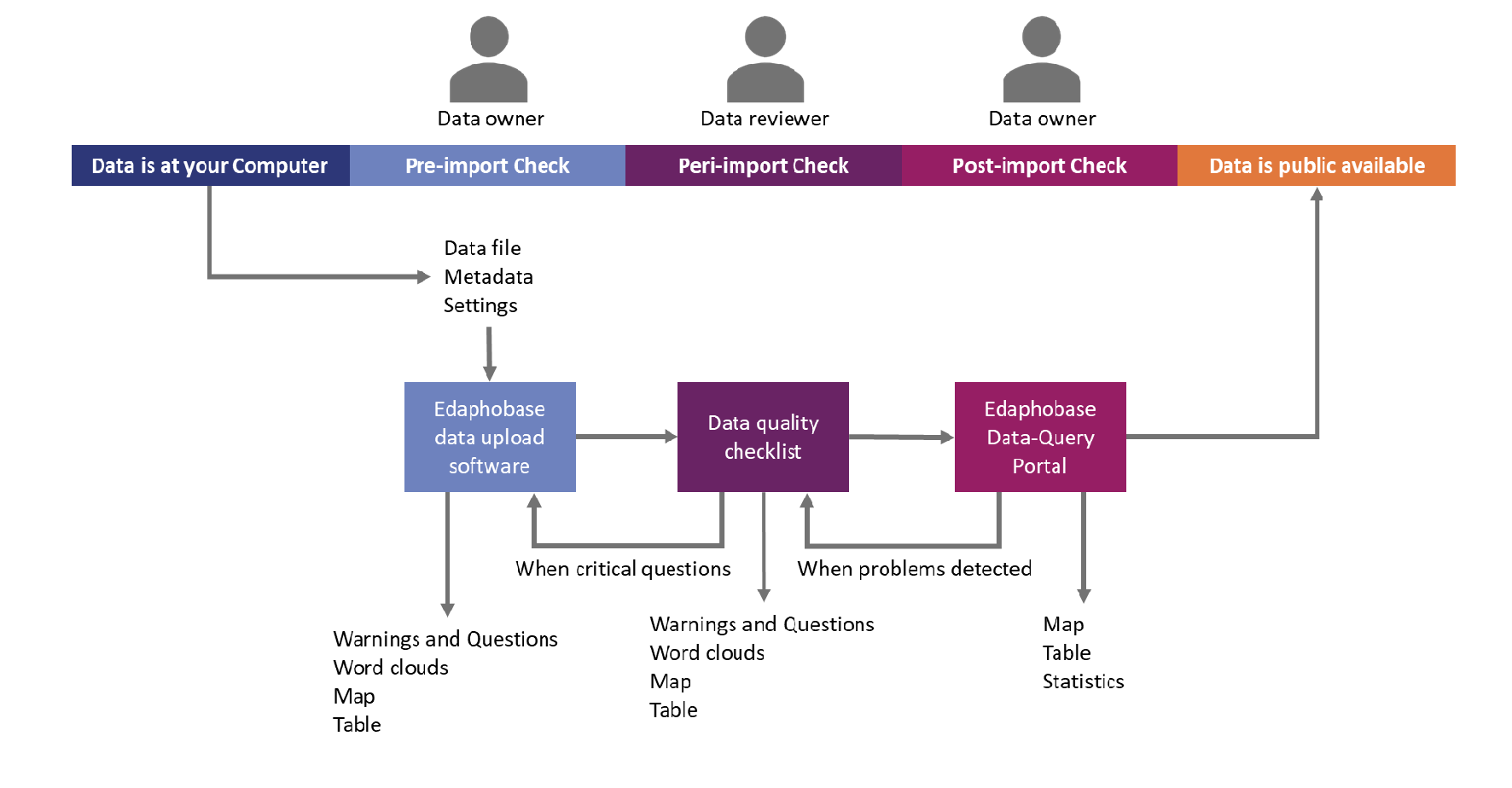

Data quality control

All newly imported data go through a series of quality control procedures to ensure the highest possible data integration and comparability. These procedures (similar to the review and revision of a journal manuscript) take some time, so importing data into Edaphobase usually takes longer than simply uploading a “stand-alone” table to a generic data repository.

- Pre-import quality control

The data-upload software automatically performs the first quality control steps. It ensures that all variable names, as well as the vocabularies used in categorical variables, conform to Edaphobase controlled vocabularies, thereby avoiding typographical errors, including in taxonomic nomenclature. It also ensures that all numeric variables are within possible ranges (e.g., no negative individual counts, soil pH not outside possible limits). The software furthermore shows the data provider the geo-coordinates (in a map view) as well as boxplots and outliers for numeric variables (or word clouds for categorical variables), so that the data provider can check for possible data errors.
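The automated checks described above can be illustrated with a minimal Python sketch. It is not the Edaphobase upload software: the vocabulary, the variable names (`habitat`, `soil_ph`, `individual_count`), the ranges, and both function names are assumptions made purely for illustration, and the outlier rule is the common 1.5 × IQR boxplot convention, which may differ from what the software actually uses.

```python
from statistics import quantiles

# Hypothetical controlled vocabulary and plausible ranges (illustrative only,
# not the actual Edaphobase lists).
ALLOWED_HABITATS = {"forest", "grassland", "arable land"}
RANGES = {"individual_count": (0, float("inf")), "soil_ph": (0.0, 14.0)}
KNOWN_VARIABLES = set(RANGES) | {"habitat"}


def check_record(record):
    """Return a list of human-readable problems found in one record (a dict)."""
    problems = []
    # Variable names must belong to the controlled set.
    for name in sorted(set(record) - KNOWN_VARIABLES):
        problems.append(f"unknown variable name: {name}")
    # Categorical values must come from the controlled vocabulary.
    habitat = record.get("habitat")
    if habitat is not None and habitat not in ALLOWED_HABITATS:
        problems.append(f"habitat not in vocabulary: {habitat}")
    # Numeric values must lie within possible ranges.
    for name, (low, high) in RANGES.items():
        value = record.get(name)
        if value is not None and not (low <= value <= high):
            problems.append(f"{name} out of range: {value}")
    return problems


def boxplot_outliers(values):
    """Return values lying more than 1.5 * IQR outside the quartiles."""
    q1, _, q3 = quantiles(values, n=4)
    spread = 1.5 * (q3 - q1)
    return [v for v in values if v < q1 - spread or v > q3 + spread]
```

A record with a misspelled habitat, such as `{"habitat": "forrest", "soil_ph": 15.2}`, would yield one vocabulary problem and one range problem, while `boxplot_outliers` only flags values for the data provider to inspect; it does not reject them.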

- Peri-import quality control

Between data upload and final import into the database, the data are reviewed manually. This review checks, e.g., that geo-coordinates are correct, that site names etc. are meaningful and descriptive, that data are complete and non-redundant, that multiple data are not given in one data field (no non-atomized data), that data given in one variable are consistent (semantic consistency, no data schizophrenia), that information is given in the correct data field (structural consistency), and much more. A standardized checklist is used for this procedure.

To save time in this quality-review process (avoiding major data revisions), data providers are strongly encouraged to go through this checklist before uploading, to ensure that their data conform to the quality standards.
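Data providers who want to pre-screen a table before upload could automate two of the items named above, non-atomized fields and redundant rows, along the following lines. This is only a rough sketch under stated assumptions: the separator pattern and both function names are hypothetical, the heuristic will produce false positives (e.g., a slash inside a legitimate value), and it does not replace the manual review or the actual checklist.

```python
import re

# Hypothetical separators that suggest several values packed into one field.
MULTI_VALUE = re.compile(r"[;|/]|\band\b")


def non_atomized_fields(record):
    """Return the names of text fields that appear to hold more than one value."""
    return sorted(
        name
        for name, value in record.items()
        if isinstance(value, str) and MULTI_VALUE.search(value)
    )


def duplicate_rows(records):
    """Return the indices of rows that exactly repeat an earlier row."""
    seen = set()
    duplicates = []
    for index, record in enumerate(records):
        # Field names are unique within a row, so sorting by item is safe.
        key = tuple(sorted(record.items()))
        if key in seen:
            duplicates.append(index)
        seen.add(key)
    return duplicates
```

Flagged fields and rows are candidates for a closer look, not confirmed errors.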

- Post-import quality control

After the above procedures have been successfully completed, the data set is imported and integrated into the database. At this point it is accessible only to the data provider. A link to the data is sent to the provider, who is led through various views of the data (from basic summaries, through maps, to data matrices and the raw data tables) in order to confirm that no data or import errors exist. After confirmation by the data provider (or error correction), the data are released to public view (based upon the provider’s release authorizations).